As technology evolves, so does the role of the Database Administrator (DBA). A DBA manages and maintains databases, protects data integrity, and keeps databases available. With organizations managing ever more data, DBAs are in high demand, yet many of the tasks they perform can be automated. In this post, we will discuss five tasks that take up most of a DBA's time but can be automated.

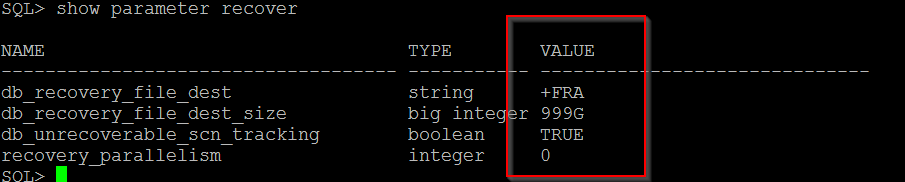

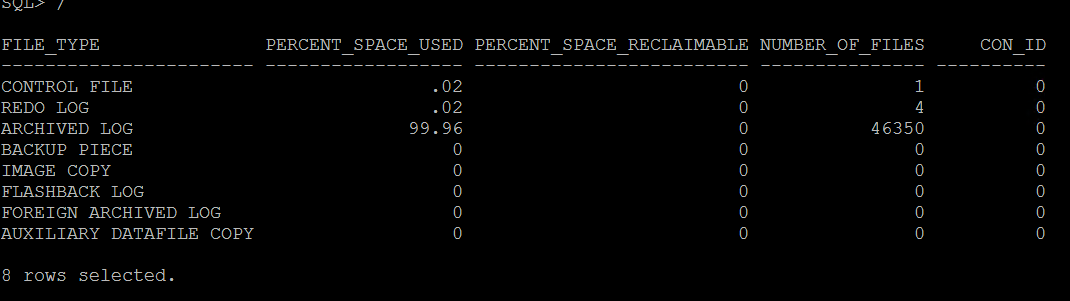

- Backup and Recovery: One of a DBA's primary responsibilities is ensuring that data is backed up and can be recovered after a disaster. Modern database management systems allow backup and recovery processes to be automated, which saves DBAs a significant amount of time and lets them focus on other tasks.

- Monitoring: DBAs spend a lot of time monitoring databases to make sure they run smoothly. This includes tracking performance, identifying potential issues, and making adjustments as needed. Advanced monitoring tools can automate many of these tasks: they detect issues and make adjustments in real time, freeing DBAs to focus on other critical work.
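
To make the monitoring idea concrete, here is a minimal sketch of the kind of check an automated tool might run. It is an illustration only: the metric names (`cpu_percent`, `active_connections`), the `Threshold` type, and the `find_alerts` function are hypothetical and do not come from any particular monitoring product. A real tool would collect the samples from the database itself.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Threshold:
    """A hypothetical alert rule: fire when `metric` rises above `limit`."""
    metric: str
    limit: float


def find_alerts(samples: Dict[str, float], thresholds: List[Threshold]) -> List[str]:
    """Return one message for each metric whose latest sample exceeds its limit."""
    alerts = []
    for rule in thresholds:
        value = samples.get(rule.metric)
        # Metrics with no sample are skipped rather than treated as failures.
        if value is not None and value > rule.limit:
            alerts.append(f"{rule.metric}={value} exceeds limit {rule.limit}")
    return alerts


if __name__ == "__main__":
    # Assumed sample values; in practice these would be read from the database.
    latest = {"cpu_percent": 95.0, "active_connections": 40.0}
    rules = [Threshold("cpu_percent", 90.0), Threshold("active_connections", 100.0)]
    for message in find_alerts(latest, rules):
        print(message)
```

Run on a schedule, a check like this could page a DBA only when a limit is crossed, instead of requiring someone to watch dashboards.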

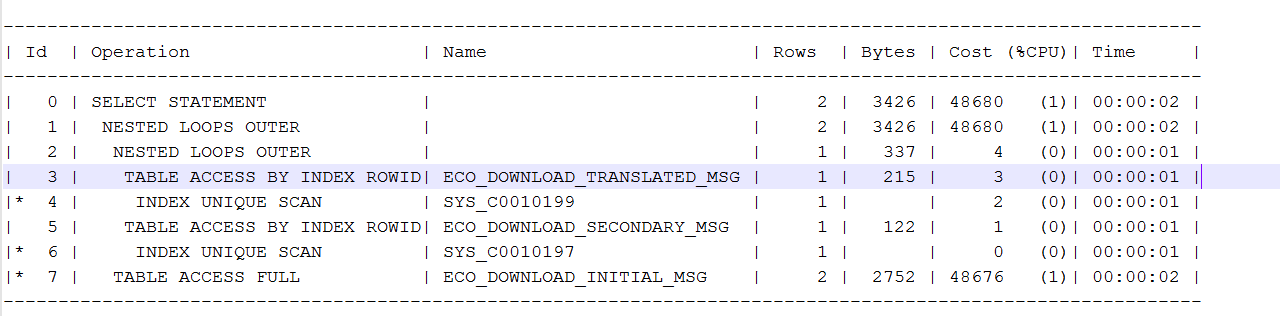

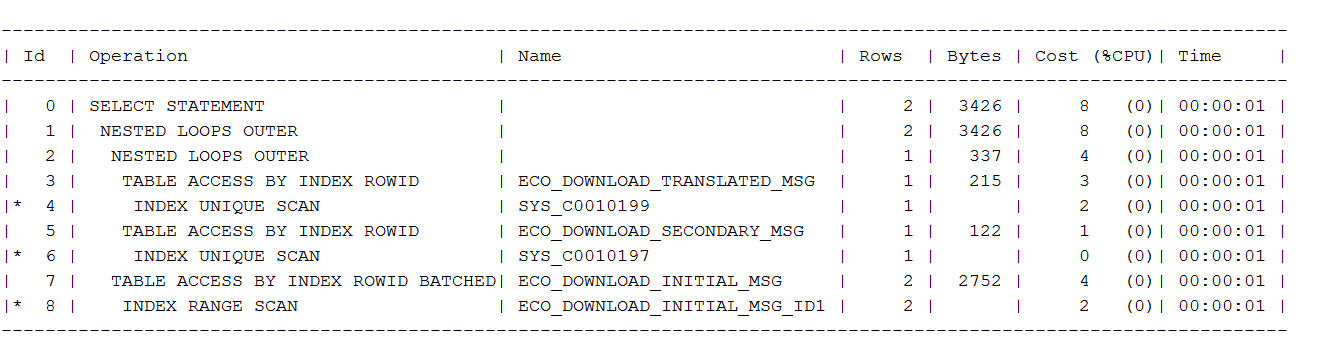

- Performance Tuning: Performance tuning is another critical task that takes up much of a DBA's time. It involves analyzing queries, identifying bottlenecks, and making adjustments to improve performance. Modern database management systems come with built-in tuning capabilities that can be automated: they analyze queries, identify bottlenecks, and recommend improvements.

- Patching and Upgrades: DBAs spend a lot of time patching and upgrading databases to keep them secure and up to date. This work can be automated as well: automated patching and upgrade tools can identify vulnerabilities and install updates, saving DBAs a significant amount of time.

- Capacity Planning: DBAs spend a lot of time planning and managing database capacity so that it meets the needs of the organization. With modern database management systems, capacity planning can be automated: tools analyze database usage patterns and recommend capacity changes, allowing DBAs to focus on other tasks.
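
As a rough illustration of the capacity planning item, the sketch below fits a straight line to daily storage usage and estimates how many days remain before the disk is full. It assumes one usage reading per day and roughly linear growth. The function name `days_until_full` and the sample numbers are made up for the example, and real tools may use more sophisticated models.

```python
from typing import Optional, Sequence


def days_until_full(daily_usage_gb: Sequence[float], capacity_gb: float) -> Optional[float]:
    """Estimate days from the last reading until usage reaches capacity.

    Fits a least-squares line through the readings (one per day).
    Returns None when usage is flat or shrinking, since no fill date exists.
    """
    n = len(daily_usage_gb)
    if n < 2:
        raise ValueError("need at least two daily readings to fit a trend")

    mean_x = (n - 1) / 2  # mean of day indexes 0..n-1
    mean_y = sum(daily_usage_gb) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(daily_usage_gb))

    slope = sxy / sxx  # estimated growth in GB per day
    if slope <= 0:
        return None
    intercept = mean_y - slope * mean_x

    # Day index at which the fitted line reaches capacity, measured from the last reading.
    full_day = (capacity_gb - intercept) / slope
    return max(0.0, full_day - (n - 1))


if __name__ == "__main__":
    usage = [100.0, 110.0, 120.0, 130.0]  # assumed readings, GB per day
    print(days_until_full(usage, capacity_gb=200.0))
```

A scheduled job could run an estimate like this and open a ticket once the projected number of days drops below a chosen lead time.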

DBAs play a critical role in managing and maintaining databases, but many of their routine tasks can be automated. Automated tools can save DBAs a significant amount of time, allowing them to focus on work such as data modeling, data architecture, and database design. Organizations should explore automation tools to improve efficiency and free DBAs for strategic initiatives that drive business growth.

The idea is not to replace DBAs, but to make their work more effective and valuable!